This article, Power Tips to Get Drums to Stand Out, is aimed at unleashing the sound designer in you. We all have one, and he/she is that voice that creeps into your head and says things like ‘You know you want to add copious amounts of distortion to that snare, don’t you? Yes, you do. Go on, do it. Do it!’ or ‘You call that a kick drum? You just know that it needs 8 compressors in series to bring up some of that fluffy low end, don’t you? I said, don’t you?’

The battle is very real.

Let’s take some time out, crush some Prozac into the 9 gallons of espresso we are about to hit, and discuss the pedantic differences between a mix engineer and a producer.

In days gone by, also known as ‘the bad hair years’, the roles of the engineer and producer were clearly defined. A studio engineer’s job involved managing and maintaining all forms of hardware, operating and maintaining finger slicing tape machines, routing and patching effects and dynamics, miking up sessions, making coffee, laughing at the singer’s jokes, cleaning the studio, making more coffee, befriending the drummer… and conducting what we in the industry refer to as a level and pan mix. This entailed gain staging the entire mix and panning sounds for correct spot pan placement and to alleviate the problems associated with clashing, masking and summing of frequencies. The producer, on the other hand, would walk in when the level and pan mix was completed and begin to colour the mix creatively. Yes, the producer also had additional roles like managing and directing the artistes, bringing in external musicians where needed, acting as the mediator between the label and studio and so on, but the real aim of the producer was to colour the mix creatively and optimise it for the genre it was aimed at. For this reason alone we would often buy albums because we liked the style and creative input of a particular producer. Producers had their own followers.

Nowadays, mix engineers or producers or beat makers or whatever is the politically correct and in fashion tag to use, have to manage almost all areas of the recording, mixing, mastering, delivering and marketing processes. The role of the producer has changed dramatically. What hasn’t changed is the creative aspect of production. This is what defines a producer’s signature – the colour associated with a production. For this reason alone more and more producers are exploring sound design as a means to realise their creative aims.

So, let me share with you some techniques I use to colour drum sounds and beats, and hopefully they will prompt the sound designer in you to share equal stage with your mixing self.

Parallel Magic

Parallel Processing has been around for decades and it is an extremely flexible and potent way to process sounds. It originally began with using hardware (consoles) but the setting up and configuring is pretty much the same for software.

The idea is quite simple: within the DAW you create a copy of the track/channel you want to process and apply all processing to the copied track. You then mix the processed track with the original unprocessed track. In effect, both tracks are run in parallel. I use both track and channel to describe this process as different DAWs seem to use either to describe a path where audio or data is carried. A variant of this technique involves using an auxiliary channel for the processing and sending the unprocessed track/channel to the auxiliary channel where the processor(s) sit. The beauty of using this send/return scenario is that it affords you control over how much of the processing channel is required by using varying send amounts from the unprocessed channel. It also allows other tracks to be sent to the same processing channel, again with varying send amounts.

The most common use for parallel processing, and one that I am sure you have come across, is that of parallel compression (a.k.a. New York compression). This involves heavily compressing the copied track/channel or auxiliary and mixing it with the dry unprocessed track. However, this is not where parallel processing starts and ends. You can use any type of effect or processor on the copied track/channel.
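To make the idea concrete, here is a minimal sketch of parallel compression in Python. It is not how any particular DAW or compressor works: the `compress` function is a deliberately crude static compressor (no attack or release), and the threshold, ratio and make-up values are assumptions picked purely for illustration.

```python
import math

def compress(samples, threshold_db=-20.0, ratio=8.0, makeup=4.0):
    """Crude static compressor (illustrative): gain is set per sample, no attack/release."""
    out = []
    for x in samples:
        level = abs(x)
        if level == 0.0:
            out.append(0.0)
            continue
        level_db = 20.0 * math.log10(level)
        if level_db > threshold_db:
            # Above the threshold the level only rises 1/ratio as fast.
            target_db = threshold_db + (level_db - threshold_db) / ratio
            gain = 10.0 ** ((target_db - level_db) / 20.0)
        else:
            gain = 1.0
        out.append(x * gain * makeup)
    return out

def parallel_mix(dry, wet, wet_level=1.0):
    """Sum the untouched signal with the processed copy."""
    return [d + wet_level * w for d, w in zip(dry, wet)]

dry = [1.0, 0.05]            # a loud peak and a quiet detail
wet = compress(dry)          # the heavily compressed copy
mixed = parallel_mix(dry, wet)

boost_loud = mixed[0] / dry[0]    # how much the peak grew
boost_quiet = mixed[1] / dry[1]   # how much the quiet detail grew
print(round(boost_loud, 2), round(boost_quiet, 2))
```

With these made-up settings the quiet detail comes up far more than the peak does, which is the whole appeal: the body and low level information are lifted while the original transients stay largely intact.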

Parallel processing can be used on just about any sound – be it vocals, bass, guitars, drums and so on. However, if you do decide that parallel processing is for you then you need to consider the single most obvious problem that you might be presented with – that of latency (time delay). Adding plugins (processors) to the copied channel can result in a slight time delay caused by the time it takes for plugins to process the input signal and output the result. When the delayed (copied) signal is mixed with the unprocessed original signal, phasing can occur. However, most DAW (digital audio workstation) software comes supplied with automatic delay compensation, which keeps all tracks time aligned (in sync) by delaying the other tracks to match the track with the greatest latency.
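The phasing problem is easy to demonstrate with a few lines of Python. This is only a sketch under assumed numbers: a 1 kHz sine at a 48 kHz sample rate and a hypothetical plugin latency of 24 samples, which happens to be exactly half a cycle at that frequency, so it is the worst case for that one tone.

```python
import math

SAMPLE_RATE = 48000   # assumed sample rate
LATENCY = 24          # hypothetical plugin latency in samples

def sine(freq_hz, length):
    return [math.sin(2.0 * math.pi * freq_hz * n / SAMPLE_RATE) for n in range(length)]

def delay(samples, n):
    """Delay a signal by n samples, keeping the same length."""
    return [0.0] * n + samples[:len(samples) - n]

def peak(samples):
    return max(abs(s) for s in samples)

dry = sine(1000.0, 4800)
wet = delay(dry, LATENCY)            # the copy arrives late

# No compensation: the late copy is half a cycle out and cancels the original.
uncompensated = [d + w for d, w in zip(dry, wet)]

# Delay compensation: the dry track is delayed by the same amount.
compensated = [d + w for d, w in zip(delay(dry, LATENCY), wet)]

print(peak(uncompensated[LATENCY:]), peak(compensated))
```

Once the delayed copy has arrived, the uncompensated sum is effectively silence at 1 kHz, while the compensated sum is simply twice as loud. Real material contains many frequencies, so in practice you hear comb filtering rather than total cancellation.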

A more controlled way to avoid latency mismatches is to use the parallel processor as an insert within the channel and utilise the ‘wet/dry mix’ feature of the processor. Most processors nowadays will provide a ‘mix’ output function that allows a mix of the dry (unprocessed signal) and the wet (processed signal) to run together and in parallel and to be output using a single output path. If the mix knob is set to 100%, or fully clockwise, it only outputs the wet signal. If set to 0 it outputs only the dry unprocessed signal. Anything in-between outputs a combination of the dry and wet signals and is deemed to be in parallel.
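A mix knob can be sketched as a simple crossfade. The linear law used here is an assumption (some processors use other curves), and the `drive` function is just a stand-in ‘wet’ process, not a model of any real plugin.

```python
import math

def drive(samples, amount=5.0):
    """Stand-in 'wet' process: tanh saturation (an illustrative choice)."""
    return [math.tanh(amount * x) for x in samples]

def wet_dry(dry, wet, mix):
    """mix = 0.0 gives dry only, mix = 1.0 gives wet only (linear crossfade)."""
    return [(1.0 - mix) * d + mix * w for d, w in zip(dry, wet)]

dry = [0.0, 0.1, -0.2, 0.4]
wet = drive(dry)

all_dry = wet_dry(dry, wet, 0.0)     # knob fully anticlockwise
all_wet = wet_dry(dry, wet, 1.0)     # knob fully clockwise
blend = wet_dry(dry, wet, 0.3)       # 30% wet, in parallel
print(blend)
```

Because the dry and wet paths live inside the same processor, they are blended sample for sample, which is why this route sidesteps the time alignment issue.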

The beauty of working in parallel is that you can often use more extreme settings for the parallel process and because the unprocessed/processed tracks will be mixed together the result will not sound as extreme as when using only the processed version.

Distortion

When we talk about distortion the image, invariably, conjured up is that of a guitarist thrashing his guitar with acres of overdrive. Although this gets you in the ballpark there is more to distortion than a Marshall Amp and spandex. What we really mean by distortion is the effect imparted onto a sound when it is run through a non-linear device/process (tubes, solid state amps, pre-amps, tape etc.).

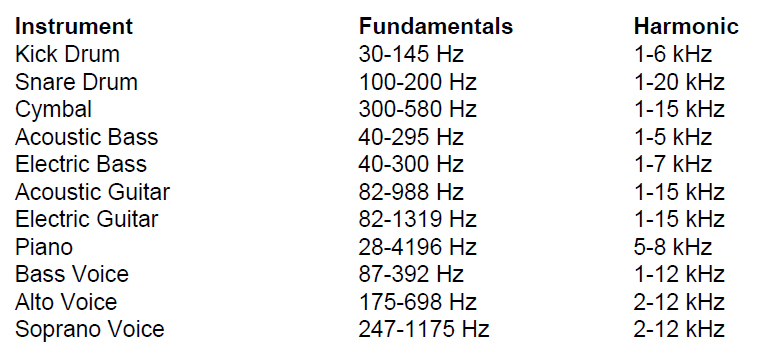

This might sound crazy but I often use distortion instead of equalisation to bring up certain frequencies within a sound and if I do opt for an equaliser (eq) I either reach for a minimum phase design eq or an eq with a drive function (Soundtoys Sie Q being a good example). Depending on the topology used the process can generate harmonics that lend themselves to colouring and enhancing a sound better than most equaliser processes would and with certain sounds, like vocals, that already have acres of harmonic content the process can be extremely useful and musical. I often use something like Soundtoys Decapitator along with an exciter like the Aphex Aural Exciter to process rap vocals. I get an amazing result and often prefer that combination to overdriving a minimum phase equaliser. But, as always, it’s all about trying the various forms of distortion and seeing if the results meet the expectations. With drum sounds we are rarely afforded anything that has a constant pitch bar the standard tone based kick and percussive sounds, and this can work against you when using processors that generate additional harmonics. For harmonics to sound both ‘right’ and musical there needs to be a musical relationship between the fundamental and the generated harmonics and with drum sounds (snares, hi hats, acoustic kick drums etc) that is rarely the case. However, that doesn’t mean distortion cannot be your friend when shaping drum sounds and beats. On the contrary, quite often use of distortion can highlight peak transients, add thickness to the body of the sound and afford a new texture that other dynamic processors struggle to equal. Anything that can overdrive a circuit, or path, will create distortion and how this distortion translates across to a sound is very dependent on the topology used.
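If you are curious what ‘generating harmonics’ looks like in numbers, the following sketch runs a pure sine through two made-up non-linear curves and measures the harmonics that appear. The curves are assumptions chosen for clarity (a symmetric tanh and a lopsided polynomial) and do not model any specific tube, tape or plugin.

```python
import math

N = 4800        # samples analysed
CYCLES = 10     # whole cycles of the fundamental inside N samples

def sine():
    return [math.sin(2.0 * math.pi * CYCLES * n / N) for n in range(N)]

def harmonic_level(samples, harmonic):
    """Amplitude of one harmonic of the fundamental, via a single-bin DFT."""
    k = CYCLES * harmonic
    re = sum(x * math.cos(2.0 * math.pi * k * n / N) for n, x in enumerate(samples))
    im = sum(x * math.sin(2.0 * math.pi * k * n / N) for n, x in enumerate(samples))
    return 2.0 * math.hypot(re, im) / N

clean = sine()
symmetric = [math.tanh(3.0 * x) for x in clean]    # same curve for + and - swings
asymmetric = [x + 0.5 * x * x for x in clean]      # treats + and - swings differently

for name, signal in (("clean", clean), ("symmetric", symmetric), ("asymmetric", asymmetric)):
    levels = [round(harmonic_level(signal, h), 3) for h in (1, 2, 3)]
    print(name, levels)   # fundamental, 2nd harmonic, 3rd harmonic
```

The clean sine has nothing but its fundamental. The symmetric curve adds a 3rd harmonic but no 2nd, while the lopsided curve adds a 2nd harmonic but no 3rd. That is one reason different topologies colour a sound so differently.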

I have a whole host of distortion plugins that I regularly use for generating all manner of distortion, be it odd and even harmonics, non harmonic, harmonic and so on. I tend to experiment more than pre-empt a result by using the correct processor. Sure, knowing which topology will result in a specific colour is a time saving and solid approach, but sometimes it’s the surprises that yield the most creative responses. Don’t get hung up on the physics. Enjoy the process.

A few of my favourite distortion plugins are:

FabFilter Saturn 2 – This is a real weapon to have in your arsenal. The beauty of this plugin is not so much the variety of distortion processes it provides but rather the power of its modulation matrix which can be used to creatively shape the distortion type used. This plugin is the Rolls Royce of distortion.

Soundtoys Decapitator – My go to distortion plugin for processing vocals. However, it affords amazing results when used in parallel and for just about any sound and in particular drum sounds.

Acustica Audio Gainstation – My latest ‘go to’ distortion plugin that sounds very musical straight out of the box and works magic on low frequencies. It has a ton of very useful features that help to further shape and highlight the result.

Soundtoys Sie Q – A truly wonderful equaliser with a drive function that delivers lovely ‘analogue warmth’. Harmonic distortion reigns supreme with this plugin. Add Radiator and Devil Loc to the list from this great company and you will be perfectly weaponised. Drum beats can really benefit from light use of distortion and these plugins are perfect for that. If you feel brave and want to maul a sound then don’t hold back, hit Radiator with anger!

Klanghelm’s SDRR – One of my favourite plugins, SDRR offers manic saturation and more! I use this on almost all snare sounds that need some grit and depth, and it can add sizzle and filth to kick sounds. It can also crush a sound to oblivion if need be.

Lindell 6X-500 – This plugin is a preamp/eq hybrid that offers transformer saturation and sounds amazing on any material. I personally use it to add character to bass sounds and tone based kick drums, and its versatility and scope never fail to surprise me.

Waves Cobalt Saphira and Hornet Harmonics – both can generate odd and even harmonics and blend them with the fundamental. You will be surprised at how powerful and musical harmonic generators can be and I implore you to explore this route before jumping on specific distortion topologies.

Exciters – Be they the Aural Exciter, the Aphex 204 or Izotope’s offerings, these plugins are wonderful harmonic generation tools and can impart a character on a sound that is pretty exclusive to the topology. They can work wonders on mid to high frequencies and I often use them to carve out beats that are hidden in a mix.

Saturation – My favourite tape saturator is Acustica Audio’s Taupe simply because it has a great level of control coupled with an amazing sound. Waves J37 is a very good tape saturator plugin as it affords the standard parameters/functions offered on most tape machines. Toneboosters ReelBus is more of a traditional tape machine and can be used to create some subtle or extreme textures. Acustica Audio’s Crimson is for those that want something a little different to the norm in the saturation department. SSL’s X-Saturator is sublime and ‘always’ sounds right and should be added to any serious saturation list.

PSP MixPack2 is a suite that offers a host of plugins covering all manner of harmonic generation/distortion, from valve/tube to tape saturation and so on, that are not only detailed but extremely musical.

Plugin Alliance’s BlackBox HG2 is an excellent valve/tube plugin and excels at processing low frequency sounds and anything that nears the air band. But if you are after something more detailed, with specific 2nd and 3rd order harmonic processing, then Plugin Alliance’s VSM-3 will fit the bill nicely.

Soundevice Digital’s FrontDaw and Royal Compressor are others to consider. FrontDaw works its magic on groups or at the master bus whereas Royal Compressor works on any sound and as an insert effect. Both offer subtle harmonic distortion although Royal can be pushed further as it both compresses and saturates at the same time.

I could go on all day expanding on the list above but to be honest the best approach is to experiment with different types of distortion and don’t get too hung up about the technical merits of the processors used. After all, it all comes down to whether the result works or not and not whether you have obeyed any engineering rules.

Transient Shaper/Design Tools

Nowadays more and more producers head for a transient control/shaping tool before using an equaliser. Whereas equalisers are excellent for working on specific frequencies or a range of frequencies, transient shapers use detection circuits to extract and split the incoming audio signal into two distinct parts: transients and body (non-transients), and offer editing and processing functions for each part.

Different transient shapers behave in different ways and offer different tools to process each part, but ultimately they all strive to do the same thing – which is to allow processing of both the attack (transients) and sustain/release (non-transients) portions of a sound independently before being combined at the output stage.
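One common way to picture the split is with two envelope followers, one fast and one slow: wherever the fast one races ahead of the slow one you are looking at a transient. The sketch below follows that idea. It is a simplified illustration with made-up coefficients, not the detection method of any of the plugins mentioned in this article.

```python
def envelope(samples, attack, release):
    """One-pole envelope follower; attack/release are smoothing amounts in (0, 1]."""
    env, out = 0.0, []
    for x in samples:
        level = abs(x)
        coeff = attack if level > env else release
        env += coeff * (level - env)
        out.append(env)
    return out

def shape_transients(samples, attack_gain=2.0, sustain_gain=1.0):
    """Apply one gain to the attack portion and another to the body."""
    fast = envelope(samples, attack=0.5, release=0.01)    # reacts almost instantly
    slow = envelope(samples, attack=0.01, release=0.01)   # lags behind on onsets
    out = []
    for x, f, s in zip(samples, fast, slow):
        # 0..1: how far the fast envelope is ahead of the slow one.
        amount = (f - s) / f if f > 1e-9 and f > s else 0.0
        gain = amount * attack_gain + (1.0 - amount) * sustain_gain
        out.append(x * gain)
    return out

# A crude 'drum hit': silence, then a burst that decays slowly.
hit = [0.0] * 10 + [(0.999 ** i) * (1.0 if i % 2 == 0 else -1.0) for i in range(2000)]

punchy = shape_transients(hit, attack_gain=2.0, sustain_gain=1.0)
tight = shape_transients(hit, attack_gain=1.0, sustain_gain=0.5)
print(punchy[10] / hit[10], punchy[1500] / hit[1500])
```

With `attack_gain` raised, the onset is boosted while the tail is left almost untouched. Dropping `sustain_gain` instead leaves the onset close to its original level and pulls the tail down, which is the same move you would make to tame a ringy or reverb heavy sample.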

Transient shapers can dynamically alter a sound’s response and thus have become excellent sound design tools. I remember using a hardware transient shaper made by SPL (Transient Designer) to add punch and body to kick and snare sounds, to suck out reverbs from sampled content, and to highlight guitar strums and piano dynamics. I now have and use the software version. Nowadays, we use transient shapers not just for sound design purposes but as mixing tools. Quite often simple transient boosts can yield better results than broadband equaliser boosts when trying to get a sound to cut through the mix. Whereas an equaliser will boost a range of frequencies and thus alter some of the sound’s body content, a transient shaper will only boost the attack transients and leave the body as is. Alternatively, transient shapers can work magic on sounds that are transient rich but lack a well controlled and specified body. I have on many occasions resolved imbalances that exist between transients and the body of a specific sound by working on redefining the sustain and release elements of the sound. Guitar lines, piano hits and anything that is either plucked or hit can often display this type of transient/non-transient imbalance and this is where transient shapers excel at becoming corrective tools. Drums benefit greatly from transient shapers and I probably use them more to shape drum sounds and beats than any other frequency dependent processor.

Here are some of my favourite transient shapers.

Boz Digital’s Transgressor 2 is a simple transient shaper with two 4 band equalisers and a basic envelope tool. When it comes to drum processing this is the weapon I use. It also comes with a threshold controlled trigger section and a useful output filtering section. It is remarkably good at processing drum sounds and even the company markets it as a drum shaping tool.

SPL Transient Designer is the original beast that gave rise to all others. Deceptively simple to use and yet powerful and musical, this plugin dynamically adjusts its threshold, thus allowing for some unique results. It is great for adding punch and bite to transient rich content and excels when it comes to processing percussive sounds. Additionally, and thanks to its clever design, it can suck reverb off reverb rich content, which can be a lifesaver when dealing with over processed drum samples.

Eventide Physion is the daddy of all transient shapers as it not only splits the transients from the body but also offers a ton of additional juicy Eventide effects and dynamics to use on each part. It would take a whole page to list the features of this plugin. However, suffice to say, this is my ‘go to’ plugin when it comes to deep sound design and corrective and coloured mix processing. It truly excels at processing plucked instruments, percussive sounds and anything that has inharmonic content (bells, gongs etc). Don’t think of this processor as just a transient shaper. It is far more than that.

Sonible Entropy Q+ is without a doubt one of the most creative tools I have in my weapons locker. Although billed as an equaliser I still regard it as a transient shaper with a twist. It can differentiate between harmonic and inharmonic (deemed as noise in this example) sound content, thus simplifying the postproduction of transients. The plugin’s functions can shape and highlight attack transients and generate additional harmonics. It is unmatched when it comes to processing dialogue/vocals and complex and rich instrument sounds. I use it on just about every sound and what it can achieve is both detailed and musical. For drum beats it can be obscenely creative.

Acustica Audio Diamond Transient is a two plugin suite of dynamic processing tools that emulate select iconic American dynamic processors. It is far more than a transient shaper and coming from the famous Acustica Audio stable you are guaranteed that it will sound ‘analog’. This is another one of those ‘gem’ finds in that it offers all the required editing tools you’d expect from this topology but more importantly sounds extremely musical. It does to drum sounds what Sharon Stone did for interviews.

All in all, transient shapers are the way forward in manipulating sounds in an ever evolving industry that is intimately governed by technology. Tools like the above point to a new form of mixing and production ethos that encapsulates both the creative and corrective aspects of sound design.

Clippers

There is a distinction between clipping in the digital domain and clipping as an effect (analogue). In a digital system, clipping (exceeding the headroom of the system) produces inharmonic distortion (distortion components that are not musically related to the source) through aliasing. In an analogue system, overdriving the input stage of an A/D converter generates a harmonic series of distortion components that, thanks to the anti-aliasing filter and the removal of distortion components above the Nyquist frequency, sounds like analogue distortion. Most software clippers provide hard and soft clipping options to further shape the audio. Hard clipping flattens and squares off the waveform when the ceiling is exceeded and results in odd order harmonics being generated. Soft clipping is far less aggressive: it introduces limiting before the ceiling is reached, which results in a more rounded clipped waveform. Modern day software clippers try to emulate both analogue and digital clipping and offer a number of different clipping algorithms with the intention of providing harmonic distortion and gain control. They are great tools for achieving loudness and punch, be it on individual sounds or wideband in a mix context, and can be used creatively to introduce different types of harmonic distortion onto a sound or to push certain sounds through a mix.
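The difference between hard and soft clipping is easiest to see in code. This is a bare-bones sketch: the tanh curve used for the soft clipper is just one assumed shape among many, and neither function includes the oversampling that real clippers use to keep aliasing down.

```python
import math

def hard_clip(samples, ceiling=0.5):
    """Anything beyond the ceiling is simply chopped flat."""
    return [max(-ceiling, min(ceiling, x)) for x in samples]

def soft_clip(samples, ceiling=0.5):
    """tanh curve (an illustrative choice): bends early, approaches the ceiling gradually."""
    return [ceiling * math.tanh(x / ceiling) for x in samples]

wave = [math.sin(2.0 * math.pi * n / 64) for n in range(64)]   # one cycle of a sine
hard = hard_clip(wave)
soft = soft_clip(wave)

print(max(hard), hard.count(0.5))     # sits exactly at the ceiling, with a flat top
print(max(soft))                      # rounded, never quite reaches the ceiling
print(hard_clip([0.25]), soft_clip([0.25]))
```

The hard clipper leaves everything below the ceiling untouched and squares off the rest, whereas the soft clipper is already pulling the level down on a sample that sits well under the ceiling. That early, gradual bend is what gives soft clipping its rounder character.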

Clippers are weapons when it comes to mauling drum sounds. You can shape tonal kick drums, like the 808 bass drum, and push them through a mix or colour them with a little fuzzy distortion which rounds them off and gives a warm gentle distorted response, or you can push the envelope and work magic for electronic drums by ‘glitching’ them for EDM genres.

Not all clippers are the same. Sure, in essence they all do the same thing but some do it better, or differently, than others. I base all my clipper buying choices on what features are provided and how well those features are integrated and implemented. Here are a few I use and can therefore recommend wholeheartedly:

Kazrog KClip can be transparent or coloured and can work on any material. It offers a number of very useful algorithms from soft to hard clipping and pretty much everything in between. The oversampling feature is a winner and this is one plugin that has low aliasing noise which equates to a more solid balanced result when driven.

IK Multimedia Classic Clipper does exactly what it says on the tin and does it well. I use it for mastering which is a testament to how well it performs. It saturates beautifully. It has a variable slope which allows for ultra soft clipping to manic hard clipping and everything in between. Magical on drum sounds.

Acustica Audio Gainstation is not the most detailed of the clippers listed here but it is without a doubt the most musical. What I love about this clipper is not just the fact that it provides a number of clipping modes that afford various levels of clipping, but that it sounds ‘analogue’. The plugin also affords additional dynamic shaping tools that can be configured in all manner of routings that help to manage the entire signal from input to output. To say it made my 808 kicks sound Jedi would be an understatement.

Waves Infected Mushroom has been around for a while now and is still as powerful today as it ever was. Very intuitive musically and can achieve solid results on just about any material. It’s quirky in nature but amazingly powerful. It does things to drum sounds that really should carry a government health warning.

Boz Digital’s Big Clipper is another winning product from this wonderful company. What makes this plugin so powerful is that it combines clipping and limiting, which translates to better control and balance. It also has a 3 band sensitivity control that offers the user control over how the clipper/limiter responds to different parts of the frequency spectrum, which makes this a very useful and intuitive processor to use.

SIR Audio Tools StandardClip is as good as it gets in the world of clipping. Although it does not boast the feature sets of the competitors listed above, make no mistake, this clipper is one of the best available. For me its extended oversampling rates result in various levels of transparency, which makes this a wonderful mastering tool.

Tokyo Dawn Labs TDR Limiter 6 GE is a modular based dynamics processor that comes with one of the best clippers I have ever used. The modular approach is both powerful and extremely flexible, which makes it work as an all-in-one mastering solution. But we are here to talk about clippers and TDR’s clipper module is simply gorgeous. For mastering this is my go to processor. For mauling basses, kicks and snares, this clipper nails it every time. Having the additional dynamics modules allows for even more detailed control of the clipped signal.

We could delve into drum processing tools all day long but that would make this article a Tolstoy affair and quite frankly I don’t have enough caffeine to venture into that realm. Today the world of production has grown into one of sound design coupled with corrective processes and highlighted with colouring goals. Thank Enke for technology.

The following video demonstrates how some of the plugins in this article can dramatically change a sound.

Eddie Bazil
